z/OS Dataset Considerations for Migrating from Endevor to Git

When moving from Endevor to Git with IBM Dependency Based Build, it’s a good time to reevaluate your dataset locations and naming conventions. IBM Dependency Based build, when utilized in a pipeline environment (such as with GitLab runners or Jenkins), will produce output such as load modules, listings, as well as non-compiled but deployable artifacts such as JCL, REXX and control cards. Endevor does this during its ‘generate’ step.

We recommend the use of new high-level qualifiers for the DBB compiles, separate from Endevor. One reason is that the naming convention your organization may not be entirely intuitive. For example, if your Endevor subsystem is for an accounting system, and the high-level qualifier does not reflect anything related to accounting. A new naming convention that is more intuitive will ensure improved maintenance in the future. For example, instead of a HLQ of “D4YYY”, change it to “ACCT”. That makes a lot more sense! While this is a usability issue, the stronger argument is more technical:

The DBB datasets are tied to the dependency information in the DBB database. When DBB does a compile, it records git checksums in its DBB database. A customer should use different high-level qualifiers than the original Endevor datasets as Endevor has a similar function. That also preserves the original Endevor datasets should we have to roll back from DBB to Endevor during the migration (this should be part of any contingency plan for risk management). If you continue to use the old Endevor datasets for Git, and then suddenly use Endevor to compile, it will invalidate the dependency information that DBB has in its database. This can lead to undesirable effects during a DBB pipeline build such as long compile times, or potential warning or failures that may indicate data corruption (in the dependency information stored in the databases).

In Endevor, for many organizations, it’s common to use Endevor ship processors to move modules to target datasets from which programs are executed. In small instances, the generated datasets are where the load modules are also executed, which is not a recommended practice even for Endevor.

Another thing to consider, is if you have COBOL 4 compiled load modules that are being executed in PDS’s. COBOL 6 requires PDSE (Libraries). You cannot compile a COBOL 6 module into an old style PDS. This produces an opportune time to create load PDSE datasets under different high-level qualifiers.

Here is a summary of some pros and cons of this approach:

Pros (using different libraries):

· Endevor datasets remain untouched.

· Makes it simple to roll back to Endevor in a contingency situation.

· Makes it an option to manage source in both Endevor and Git simultaneously (though not recommended).

· Protects Git compile datasets from possible contaminations from Endevor

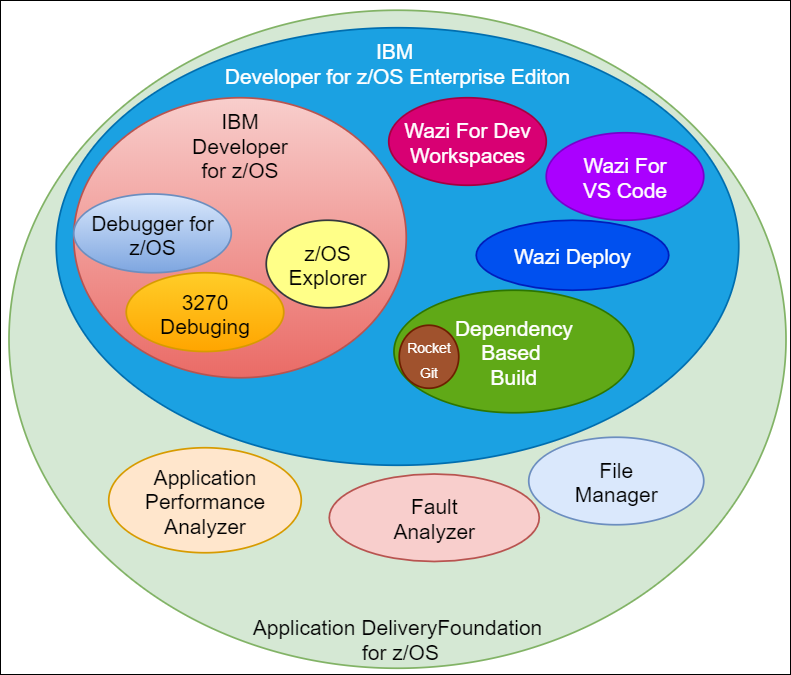

· Ensures traceability of who deployed to what dataset (Wazi Deploy or Urbancode Deploy would deploy to entirely different datasets than Endevor).

· Ensures traceability of the migration. We can go back to the original source of record (Endevor) to ensure the migration of each element without fear of it having been corrupted or contaminated.

· Offers opportunities to use names more representative of the actual applications running in the libraries.

· Allows opportunities to change operations to eliminate long standing problems with library structures.

· Ensures all target datasets are COBOL 6 compatible PDSE’s (not PDS’s). COBOL 6 compiled load modules cannot run in a PDS. COBOL 4 compiled load modules, however, can run in either a PDS or PDSE.

CONS:

· Requires updating batch JCL’s to use the new load module datasets. This is not a small con, but we can update many batch JCL’s using IDz’ tooling for mass text replacement.

· Requires more data storage (at least double since there would be a 1-1 replacement dataset)

· May require RACF updates to apply similar rules to the new high level qualifiers.

· Requires minor re-education of the developers and systems programmers.

· Need to copy existing load modules from original to new datasets which can be accomplished with IDCAMS REPRO job. However, this is ideal because we can issue a DBB scanAll (via zAppBuild) to link up the already compiled modules to the migrated source in Git. This populates the DBB database with initial dependency information associated with the copied data.

Other things to consider:

· What are the ramifications of decommissioning Endevor to the existing datasets?

· How would you view the existing listings compiled under Endevor?

If you are in the middle of planning for such a migration and need some advice, you can contact us for help.